三 Hive 自定义函数UDF和Transform

开篇提示:

快速链接beeline的方式:

./beeline -u jdbc:hive2://hadoop1:10000 -n hadoop

1.自定义函数UDF

当Hive提供的内置函数无法满足你的业务处理需要时,此时就可以考虑使用用户自定义函数(UDF:user-defined function)

UDF 作用于单个数据行,产生一个数据行作为输出。(数学函数,字符串函数)

2开发实例

2.1 原始数据格式

{"movie":"1193","rate":"5","timeStamp":"978300760","uid":"1"}

{"movie":"661","rate":"3","timeStamp":"978302109","uid":"1"}

{"movie":"914","rate":"3","timeStamp":"978301968","uid":"1"}

{"movie":"3408","rate":"4","timeStamp":"978300275","uid":"1"}

{"movie":"2355","rate":"5","timeStamp":"978824291","uid":"1"}

{"movie":"1197","rate":"3","timeStamp":"978302268","uid":"1"}

{"movie":"1287","rate":"5","timeStamp":"978302039","uid":"1"}

{"movie":"2804","rate":"5","timeStamp":"978300719","uid":"1"}

{"movie":"594","rate":"4","timeStamp":"978302268","uid":"1"}

{"movie":"919","rate":"4","timeStamp":"978301368","uid":"1"}

{"movie":"595","rate":"5","timeStamp":"978824268","uid":"1"}

{"movie":"938","rate":"4","timeStamp":"978301752","uid":"1"} 2.2 创建数据表

create table t_rating (line string)

row format delimited; 2.3 导入数据

load data local inpath '/home/hadoop/rating.json' into table t_rating;

2.4 开发UDF程序

package cn.itcast.hive;import org.apache.hadoop.hive.ql.exec.UDF; import org.codehaus.jackson.map.ObjectMapper;/*** @author ntjr* 解析json数据**/ public class PaserJson extends UDF {private ObjectMapper mapper = new ObjectMapper();public String evaluate(String line) {try {RatingBean ratingBean = mapper.readValue(line, RatingBean.class);return ratingBean.toString();} catch (Exception e) {// TODO Auto-generated catch block e.printStackTrace();}return "";} }

用于解析t_rating表中每一行的json数据。

package cn.itcast.hive;public class RatingBean {private String movie;private String rate;private String timeStamp;private String uid;public String getMovie() {return movie;}public void setMovie(String movie) {this.movie = movie;}public String getRate() {return rate;}public void setRate(String rate) {this.rate = rate;}public String getTimeStamp() {return timeStamp;}public void setTimeStamp(String timeStamp) {this.timeStamp = timeStamp;}public String getUid() {return uid;}public void setUid(String uid) {this.uid = uid;}@Overridepublic String toString() {return movie + "\t" + rate + "\t" + timeStamp + "\t" + uid;}}

2.4将udf程序打成jar 导入hive

add JAR /home/hadoop/udf.jar;

2.5 创建临时函数与开发好的udf进行关联

create temporary function paseJson as 'cn.itcast.hive.PaserJson';

2.6 创建完整字段的t_rating02表(用于存放将单列json数据表t_rating转换成多列数据表t_rating02的结果)

create table t_rating02 as select split(paseJson(line),'\t')[0] as movieid, split(paseJson(line),'\t')[1] as rate, split(paseJson(line),'\t')[2] as timestring, split(paseJson(line),'\t')[3] as uid from t_rating;

至此:完成字段表t_rating02转换完成。

3.利用Transfrom将t_rating02表中的timestring字段转换成周几的形式。

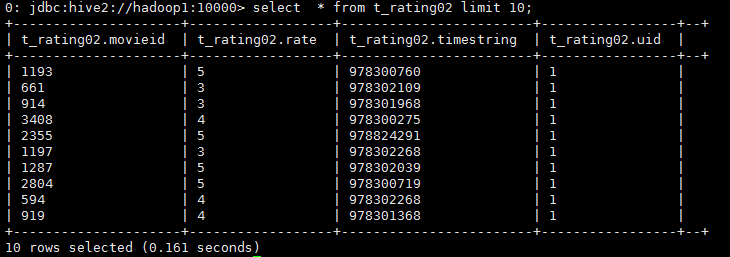

3.1 t_rating02中的样式:

3.2编写weekday_mapper.py脚本,处理t_rating02表中的timestring字段

#!/bin/python import sys import datetimefor line in sys.stdin:line = line.strip()movieid, rating, unixtime,userid = line.split('\t')weekday = datetime.datetime.fromtimestamp(float(unixtime)).isoweekday()print '\t'.join([movieid, rating, str(weekday),userid])

3.3 上传weekday_mapper.py脚本,前提是保证本机装有python

add FILE weekday_mapper.py;

3.4 创建新表t_rating_date,保存脚本处理后的数据

create TABLE t_rating_date as SELECTTRANSFORM (movieid , rate, timestring,uid)USING 'python weekday_mapper.py'AS (movieid, rating, weekday,userid) FROM t_rating02;

3.5查看t_rating_date表

至此将json数据转换成数据表。