
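A CUDA program manages concurrency through streams. Operations within one stream execute in issue order, while operations in different streams may run concurrently or out of order relative to each other. The point of streams is to let computation in one stream overlap with data transfer in another, which improves GPU utilization.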

To use a stream, create a cudaStream_t object and pass it as an argument when launching a kernel and when issuing a copy with cudaMemcpyAsync.
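As a minimal sketch of that pattern, the following creates one stream and passes it to two copies and a kernel launch. The kernel `add_one` and the helper `run_in_stream` are hypothetical names made up for this illustration; only the CUDA runtime calls are real API.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel for illustration: adds 1 to every element.
__global__ void add_one(int *data, int n)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < n) data[idx] += 1;
}

// Runs copy-in, kernel, copy-out in one stream.
// Returns true if every element comes back as 1.
bool run_in_stream(int n)
{
    size_t nbytes = n * sizeof(int);
    int *h = nullptr, *d = nullptr;
    if (cudaMallocHost((void **)&h, nbytes) != cudaSuccess) return false;
    if (cudaMalloc((void **)&d, nbytes) != cudaSuccess) { cudaFreeHost(h); return false; }
    for (int i = 0; i < n; i++) h[i] = 0;

    cudaStream_t stream;
    cudaStreamCreate(&stream);

    // The stream is the last argument of the async copy and the
    // fourth launch parameter of the kernel.
    cudaMemcpyAsync(d, h, nbytes, cudaMemcpyHostToDevice, stream);
    add_one<<<(n + 255) / 256, 256, 0, stream>>>(d, n);
    cudaMemcpyAsync(h, d, nbytes, cudaMemcpyDeviceToHost, stream);

    // Operations in one stream run in issue order; wait for all three.
    bool ok = (cudaStreamSynchronize(stream) == cudaSuccess);
    for (int i = 0; ok && i < n; i++) ok = (h[i] == 1);

    cudaStreamDestroy(stream);
    cudaFree(d);
    cudaFreeHost(h);
    return ok;
}

int main()
{
    printf("%s\n", run_in_stream(1 << 20) ? "ok" : "failed");
    return 0;
}
```

Because all three operations go into the same stream, they still run one after another; overlap only appears once independent work is placed in other streams.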

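Streams go hand in hand with asynchronous execution: a host-side API call or kernel launch returns on the CPU before the GPU has actually finished the operation. Thanks to this, the CPU can do other work while the GPU is computing or transferring data, which makes better use of the compute resources in the system.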
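A small sketch of what asynchronous execution looks like from the host side: the kernel launch returns at once, the CPU does unrelated work, and only then waits for the GPU. The kernel `busy_add` and the helper `overlap_cpu_and_gpu` are hypothetical, and the CPU work is a stand-in loop.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel: increments each element `iterations` times.
__global__ void busy_add(int *data, int n, int iterations)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < n)
        for (int i = 0; i < iterations; i++) data[idx] += 1;
}

// Queues GPU work, does CPU work while it runs, then waits.
// Returns the CPU-side sum; writes the first GPU element to *first_element.
long long overlap_cpu_and_gpu(int n, int iterations, int *first_element)
{
    size_t nbytes = n * sizeof(int);
    int *d = nullptr;
    cudaMalloc((void **)&d, nbytes);

    cudaStream_t stream;
    cudaStreamCreate(&stream);

    // Both calls only enqueue work and return to the host right away.
    cudaMemsetAsync(d, 0, nbytes, stream);
    busy_add<<<(n + 255) / 256, 256, 0, stream>>>(d, n, iterations);

    // Stand-in for useful CPU work done while the GPU is busy.
    long long cpu_sum = 0;
    for (int i = 1; i <= 1000; i++) cpu_sum += i;

    // Block until everything queued in the stream has finished.
    cudaStreamSynchronize(stream);
    cudaMemcpy(first_element, d, sizeof(int), cudaMemcpyDeviceToHost);

    cudaStreamDestroy(stream);
    cudaFree(d);
    return cpu_sum;
}

int main()
{
    int first = -1;
    long long sum = overlap_cpu_and_gpu(1 << 20, 100, &first);
    printf("cpu sum = %lld, gpu element = %d\n", sum, first);
    return 0;
}
```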

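For transfers issued in a stream to overlap with other work, the host buffers involved must be pinned (page-locked) memory, for example memory allocated with cudaMallocHost.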
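The sketch below shows two ways to obtain pinned host memory: allocating it with cudaMallocHost, or pinning an existing malloc buffer with cudaHostRegister. The helper name `pinned_round_trip` and the buffer names are made up for this illustration.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Copies n ints host -> device -> host through a stream, using two kinds of
// pinned host buffer. Returns true if the data survives the round trip.
bool pinned_round_trip(int n)
{
    size_t nbytes = n * sizeof(int);
    int *h_src = nullptr, *d_buf = nullptr;

    // Option 1: allocate page-locked host memory directly.
    if (cudaMallocHost((void **)&h_src, nbytes) != cudaSuccess) return false;

    // Option 2: pin a buffer that was allocated with plain malloc.
    int *h_dst = (int *)malloc(nbytes);
    if (h_dst == nullptr ||
        cudaHostRegister(h_dst, nbytes, cudaHostRegisterDefault) != cudaSuccess) {
        free(h_dst);
        cudaFreeHost(h_src);
        return false;
    }

    for (int i = 0; i < n; i++) { h_src[i] = i; h_dst[i] = -1; }
    cudaMalloc((void **)&d_buf, nbytes);

    cudaStream_t stream;
    cudaStreamCreate(&stream);
    cudaMemcpyAsync(d_buf, h_src, nbytes, cudaMemcpyHostToDevice, stream);
    cudaMemcpyAsync(h_dst, d_buf, nbytes, cudaMemcpyDeviceToHost, stream);
    bool ok = (cudaStreamSynchronize(stream) == cudaSuccess);
    for (int i = 0; ok && i < n; i++) ok = (h_dst[i] == i);

    cudaStreamDestroy(stream);
    cudaFree(d_buf);
    cudaHostUnregister(h_dst);   // unpin before freeing the malloc buffer
    free(h_dst);
    cudaFreeHost(h_src);
    return ok;
}

int main()
{
    printf("%s\n", pinned_round_trip(1 << 20) ? "ok" : "failed");
    return 0;
}
```

Pinned memory is a limited resource, so it is usually reserved for buffers that are actually transferred asynchronously.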

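The main API functions for working with streams are:

cudaStreamCreate, which creates a stream;

cudaStreamDestroy, which releases a stream;

cudaStreamQuery, which checks whether a stream has finished: it returns cudaSuccess if all operations in the stream have completed, and cudaErrorNotReady otherwise;

cudaStreamSynchronize, which blocks the host until all operations queued in the given stream have completed.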
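To illustrate how these calls fit together, here is a rough sketch that polls a stream with cudaStreamQuery instead of blocking in cudaStreamSynchronize. The kernel `spin_add` and the helper `poll_until_done` are hypothetical; how many polls happen depends on the hardware, so the program prints the count without assuming a value.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel that keeps the GPU busy for a short while.
__global__ void spin_add(int *data, int n, int iterations)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < n)
        for (int i = 0; i < iterations; i++) data[idx] += 1;
}

// Polls the stream until it has drained.
// Returns the number of cudaErrorNotReady results seen, or -1 on error.
int poll_until_done(cudaStream_t stream)
{
    int polls = 0;
    cudaError_t status;
    while ((status = cudaStreamQuery(stream)) == cudaErrorNotReady)
        polls++;                      // the host could do useful work here
    return status == cudaSuccess ? polls : -1;
}

int main()
{
    const int n = 1 << 20;
    int *d = nullptr;
    cudaMalloc((void **)&d, n * sizeof(int));

    cudaStream_t stream;
    cudaStreamCreate(&stream);                        // create the stream

    cudaMemsetAsync(d, 0, n * sizeof(int), stream);
    spin_add<<<(n + 255) / 256, 256, 0, stream>>>(d, n, 1000);

    int polls = poll_until_done(stream);              // non-blocking checks
    printf("stream finished after %d not-ready polls\n", polls);

    cudaStreamSynchronize(stream);                    // returns at once: stream is idle
    cudaStreamDestroy(stream);                        // release the stream
    cudaFree(d);
    return 0;
}
```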

The CUDA SDK includes a simple example of streams, simpleStreams:

    #include <stdlib.h>
    #include <stdio.h>
    #include <string.h>

    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"

    // Each thread adds *factor to its own element num_iterations times.
    __global__ void init_array(int *g_data, int *factor, int num_iterations)
    {
        int idx = blockIdx.x * blockDim.x + threadIdx.x;

        for (int i = 0; i < num_iterations; i++)
            g_data[idx] += *factor;
    }

    // Returns 1 if all n elements equal c; prints the first mismatch and returns 0 otherwise.
    int correct_data(int *a, const int n, const int c)
    {
        for (int i = 0; i < n; i++)
        {
            if (a[i] != c)
            {
                printf("%d:%d %d\n", i, a[i], c);
                return 0;
            }
        }
        return 1;
    }

    int main(int argc, char *argv[])
    {
        int CUDA_device = 0;
        int nstream = 4;              // number of streams
        int nreps = 10;               // repetitions per timed test
        int n = 6 * 1024 * 1024;      // number of ints in the data set
        int nbytes = n * sizeof(int);
        dim3 threads, blocks;
        float elapsed_time, time_memcpy, time_kernel;   // milliseconds
        int niterations = 5;          // additions per element per kernel launch

        int num_devices = 0;
        cudaGetDeviceCount(&num_devices);
        cudaSetDevice(CUDA_device);

        cudaDeviceProp device_properties;
        cudaGetDeviceProperties(&device_properties, CUDA_device);
        printf("running on: %s\n\n", device_properties.name);

        // Host buffer is pinned so that async copies can overlap with kernels.
        int c = 5;
        int *a = 0;
        cudaMallocHost((void **)&a, nbytes);

        int *d_a = 0, *d_c = 0;
        cudaMalloc((void **)&d_a, nbytes);
        cudaMalloc((void **)&d_c, sizeof(int));
        cudaMemcpy(d_c, &c, sizeof(int), cudaMemcpyHostToDevice);

        cudaStream_t *streams = (cudaStream_t *)malloc(nstream * sizeof(cudaStream_t));
        for (int i = 0; i < nstream; i++)
            cudaStreamCreate(&(streams[i]));

        cudaEvent_t start_event, stop_event;
        cudaEventCreate(&start_event);
        cudaEventCreate(&stop_event);

        // Time a single device-to-host copy of the whole array.
        cudaEventRecord(start_event, 0);
        cudaMemcpyAsync(a, d_a, nbytes, cudaMemcpyDeviceToHost, streams[0]);
        cudaEventRecord(stop_event, 0);
        cudaEventSynchronize(stop_event);
        cudaEventElapsedTime(&time_memcpy, start_event, stop_event);
        printf("memcpy:\t%.2f\n", time_memcpy);

        // Time a single kernel launch over the whole array.
        threads = dim3(512, 1);
        blocks = dim3(n / threads.x, 1);
        cudaEventRecord(start_event, 0);
        init_array<<<blocks, threads, 0, streams[0]>>>(d_a, d_c, niterations);
        cudaEventRecord(stop_event, 0);
        cudaEventSynchronize(stop_event);
        cudaEventElapsedTime(&time_kernel, start_event, stop_event);
        printf("kernel:\t\t%.2f\n", time_kernel);

        // Time nreps rounds of kernel + blocking copy without streams.
        threads = dim3(512, 1);
        blocks = dim3(n / threads.x, 1);
        cudaEventRecord(start_event, 0);
        for (int i = 0; i < nreps; i++)
        {
            init_array<<<blocks, threads>>>(d_a, d_c, niterations);
            cudaMemcpy(a, d_a, nbytes, cudaMemcpyDeviceToHost);
        }
        cudaEventRecord(stop_event, 0);
        cudaEventSynchronize(stop_event);
        cudaEventElapsedTime(&elapsed_time, start_event, stop_event);
        printf("non-stream:\t%.2f(%.2f expected)\n", elapsed_time / nreps, time_kernel + time_memcpy);

        // Time nreps rounds with the array split into nstream chunks, one per stream.
        threads = dim3(512, 1);
        blocks = dim3(n / (nstream * threads.x), 1);
        memset(a, 255, nbytes);
        cudaMemset(d_a, 0, nbytes);
        cudaEventRecord(start_event, 0);
        for (int k = 0; k < nreps; k++)
        {
            for (int i = 0; i < nstream; i++)
                init_array<<<blocks, threads, 0, streams[i]>>>(d_a + i * n / nstream, d_c, niterations);

            for (int i = 0; i < nstream; i++)
                cudaMemcpyAsync(a + i * n / nstream, d_a + i * n / nstream, nbytes / nstream,
                                cudaMemcpyDeviceToHost, streams[i]);
        }
        cudaEventRecord(stop_event, 0);
        cudaEventSynchronize(stop_event);
        cudaEventElapsedTime(&elapsed_time, start_event, stop_event);
        printf("%d streams:\t%.2f\n", nstream, elapsed_time / nreps);

        printf("--------------------------------\n");
        if (correct_data(a, n, c * nreps * niterations))
            printf("TEST PASSED\n");
        else
            printf("TEST FAILED\n");

        for (int i = 0; i < nstream; i++)
            cudaStreamDestroy(streams[i]);
        free(streams);
        cudaEventDestroy(start_event);
        cudaEventDestroy(stop_event);
        cudaFreeHost(a);
        cudaFree(d_a);
        cudaFree(d_c);

        getchar();           // keep the console window open until a key is pressed
        cudaDeviceReset();   // replaces the deprecated cudaThreadExit()
        return 0;
    }

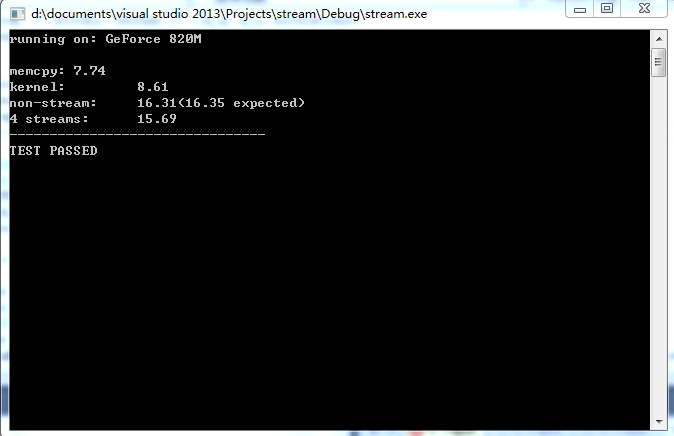

执行结果如下图所示:

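The program reports four timings, all in milliseconds:

The first, memcpy, is the time for a single copy of the whole array;

The second, kernel, is the time the kernel takes to do the computation;

The third, non-stream, is the average time per round for computation plus transfer without streams, with the sum of the kernel and memcpy times printed in parentheses as the expected value;

The fourth is the average time per round for computation plus transfer when the work is split across nstream streams (4 in this program).

Comparing the last two lines shows that using streams reduces the total time.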