
Learning redis-cluster

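- All Redis nodes (masters and replicas) are connected to each other, with every pair talking directly. Underneath, they use an internal binary protocol (the cluster bus, on the node's port + 10000) to keep that traffic fast.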

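- The cluster still has masters and replicas and still provides high availability, but there is no separate Sentinel process. That logic is built into the master nodes. Decisions about the cluster's state, such as marking a node as failed, are made by the masters (a majority of them). Failover, which Sentinel used to handle, works by a majority vote among the masters.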

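- Application code connecting to redis-cluster doesn't have to handle sharding itself, because the sharding logic is built into the cluster. When a client sends a command with a key to any node, the cluster works out which slot the key belongs to and which node owns that slot. If that is a different node, the receiving node does not forward the command. Instead it replies with a MOVED redirect, and a cluster-aware client (such as JedisCluster, or redis-cli -c) retries on the right node and remembers the slot map.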

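- redis-cluster maps all masters onto the integer range [0, 16383], i.e. 16384 hash slots. Each master owns a batch of slots (for example 0-5000, 5001-10000 and 10001-16383). To place a key, the cluster computes CRC16(key) mod 16384; it uses CRC16, not something like Java's hashCode. The resulting slot decides which node stores the key. The mapping is therefore key → slot → node, so a single key can't be moved on its own: to migrate a key you have to migrate its whole slot.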
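The key → slot step described above can be sketched in Java. This is a minimal illustration that assumes the rules from the Redis Cluster specification:

- The cluster takes CRC16 (the XMODEM variant) of the key and masks it to 0-16383.
- If a key contains a non-empty {...} hash tag, only the text inside the first pair of braces is hashed.

The class name KeySlot is made up. In a real project JedisCluster already does this internally.

```java
import java.nio.charset.StandardCharsets;

// Sketch of the Redis Cluster key -> slot mapping (class name is made up for this example).
final class KeySlot {
    static final int SLOT_COUNT = 16384;

    // CRC-16/XMODEM over data[from, to): polynomial 0x1021, initial value 0, no reflection.
    static int crc16(byte[] data, int from, int to) {
        int crc = 0;
        for (int i = from; i < to; i++) {
            crc ^= (data[i] & 0xFF) << 8;
            for (int bit = 0; bit < 8; bit++) {
                crc = (crc & 0x8000) != 0 ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF; // keep it a 16-bit value
            }
        }
        return crc;
    }

    static int slot(String key) {
        byte[] bytes = key.getBytes(StandardCharsets.UTF_8);
        int start = 0;
        int end = bytes.length;
        // Hash tag: if a '{' is followed later by a '}' with at least one byte between them,
        // only the bytes between the first '{' and the first '}' after it are hashed.
        int open = indexOf(bytes, (byte) '{', 0);
        if (open >= 0) {
            int close = indexOf(bytes, (byte) '}', open + 1);
            if (close > open + 1) {
                start = open + 1;
                end = close;
            }
        }
        // SLOT_COUNT is a power of two, so masking is the same as crc16 % 16384.
        return crc16(bytes, start, end) & (SLOT_COUNT - 1);
    }

    private static int indexOf(byte[] bytes, byte target, int from) {
        for (int i = from; i < bytes.length; i++) {
            if (bytes[i] == target) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        System.out.println(slot("foo")); // 12182
        System.out.println(slot("{user1000}.following") == slot("{user1000}.followers")); // true
    }
}
```

Running CLUSTER KEYSLOT foo in redis-cli against the cluster should report the same slot. Hash tags are what let related keys such as {user1000}.following and {user1000}.followers land on the same slot, and therefore on the same node.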

redis-cluster tutorial

This post deploys redis-cluster with docker-compose.

Download the Windows version of Docker yourself.

The official documentation is available online.

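Create a script with the following content in some folder.

I wrote mine on the desktop and named it create.sh.

This assumes git is installed and added to your environment variables, so the .sh script can run in the bash that comes with Git for Windows. If you don't know how to install git, a quick web search will tell you.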

for i in `seq 7001 7006`
do
  mkdir -p ${i}/data
done

Double-click the script to run it, or open cmd, cd to the desktop and run create.sh.
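If you'd rather not depend on Git Bash, the same folders can be created from Java. Below is a small sketch; the class name CreateDirs is made up. It creates 7001/data through 7006/data under a base directory. Like mkdir -p, it creates missing parents and doesn't complain if a folder already exists.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Java counterpart of create.sh (class name is made up for this example).
final class CreateDirs {

    // Creates <base>/<port>/data for every port in [firstPort, lastPort], like `mkdir -p`.
    static void create(Path base, int firstPort, int lastPort) {
        for (int port = firstPort; port <= lastPort; port++) {
            Path dir = base.resolve(Integer.toString(port)).resolve("data");
            try {
                // createDirectories also creates missing parents and does nothing if dir already exists.
                Files.createDirectories(dir);
            } catch (IOException e) {
                throw new UncheckedIOException("could not create " + dir, e);
            }
        }
    }

    public static void main(String[] args) {
        // Defaults to the current directory; pass another base directory as the first argument.
        Path base = Paths.get(args.length > 0 ? args[0] : ".");
        create(base, 7001, 7006);
    }
}
```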

Create a file named docker-compose.yml:

version: '3.4'

x-image: &default-image publicisworldwide/redis-cluster
x-restart: &default-restart always
x-netmode: &default-netmode host

services:
  redis1:
    image: *default-image
    network_mode: *default-netmode
    restart: *default-restart
    volumes:
      - D:\\data/redis/7001/data:/data
    environment:
      - REDIS_PORT=7001
  redis2:
    image: *default-image
    network_mode: *default-netmode
    restart: *default-restart
    volumes:
      - D:\\data/redis/7002/data:/data
    environment:
      - REDIS_PORT=7002
  redis3:
    image: *default-image
    network_mode: *default-netmode
    restart: *default-restart
    volumes:
      - D:\\data/redis/7003/data:/data
    environment:
      - REDIS_PORT=7003
  redis4:
    image: *default-image
    network_mode: *default-netmode
    restart: *default-restart
    volumes:
      - d:\\data/redis/7004/data:/data
    environment:
      - REDIS_PORT=7004
  redis5:
    image: *default-image
    network_mode: *default-netmode
    restart: *default-restart
    volumes:
      - d:\\data/redis/7005/data:/data
    environment:
      - REDIS_PORT=7005
  redis6:
    image: *default-image
    network_mode: *default-netmode
    restart: *default-restart
    volumes:
      - d:\\data/redis/7006/data:/data
    environment:
      - REDIS_PORT=7006

The folders are created on drive D automatically.

The path on my machine is D:\redis-cluster\redis-cluster.

Then run the following command:

docker-compose up -d

This pulls the image and starts all six containers.

The official docs have a table showing which Compose file format works with which Docker Engine release. Compose file format 3.4 requires Docker Engine 17.09.0 or later, so install a recent version of Docker if you can.

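Thanks for waiting. Next, create the cluster. There are two ways:

- Run redis-trib in a throwaway container against the docker-machine IP (192.168.99.106 here).
- Find a container with docker ps, exec into it, and run redis-cli --cluster create, which replaces redis-trib since Redis 5.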

docker run --rm -it inem0o/redis-trib create --replicas 1 192.168.99.106:7001 192.168.99.106:7002 192.168.99.106:7003 192.168.99.106:7004 192.168.99.106:7005 192.168.99.106:7006

docker ps

# go into the container and take a look

docker exec -it 53f32cc98f14 /bin/bash

redis-cli --cluster create 10.0.75.1:7001 10.0.75.1:7002 10.0.75.1:7003 10.0.75.1:7004 10.0.75.1:7005 10.0.75.1:7006 --cluster-replicas 1

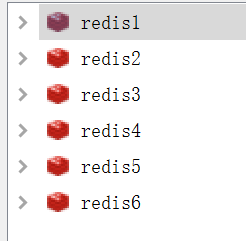

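Test the connection with Redis Desktop Manager.

Name: anything you like.

Host: your IP address. If you run Docker through docker-machine, use the address of the docker-machine VM.

Port: 7001-7006.

I added the connections by hand, entering the IP and port six times, once per node.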

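As for the code.

Take the billiards project as an example: I want to write some user information into the Redis cache. Redis stores data as key-value pairs, so we just store them. Bring it on.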

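import com.yuyuka.billiards.service.config.DiamondConfigurtion;
import com.yuyuka.billiards.service.service.lock.JedisLock;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.cloud.client.ServiceInstance;
import org.springframework.cloud.client.discovery.DiscoveryClient;
import org.springframework.cloud.client.serviceregistry.Registration;
import redis.clients.jedis.JedisCluster;

import javax.annotation.Resource;
import java.util.List;

/**
 * Base class for service providers.
 */
@Slf4j
public class AbstractbServiceProvider {

    @Autowired
    private DiscoveryClient discoveryClient;

    @Autowired
    private Registration registration;

    @Autowired
    protected JedisCluster jedisCluster;

    @Resource
    protected DiamondConfigurtion getSuperDiamondAll;

    // Lock timeout (unit as defined by JedisLock)
    private static final int TIMEOUT = 60;

    protected void logInstanceInfo() {
        ServiceInstance instance = serviceInstance();
        if (instance == null) {
            log.warn("no registered instance found for this service");
            return;
        }
        log.info("provider service, host = " + instance.getHost()
                + ", service_id = " + instance.getServiceId());
    }

    // Returns the first registered instance of this service, or null if there is none.
    protected ServiceInstance serviceInstance() {
        List<ServiceInstance> list = discoveryClient.getInstances(registration.getServiceId());
        if (list != null && !list.isEmpty()) {
            return list.get(0);
        }
        return null;
    }

    // Distributed lock on the given key, implemented by the project's JedisLock.
    protected void lock(String key) {
        JedisLock jedisLock = new JedisLock(jedisCluster, key, TIMEOUT);
        jedisLock.lock();
    }

    protected void unLock(String key) {
        // NOTE: this creates a new JedisLock instance; whether it can release a lock acquired
        // by another instance depends on how JedisLock stores and checks the lock value.
        JedisLock jedisLock = new JedisLock(jedisCluster, key, TIMEOUT);
        jedisLock.unlock();
    }

    // NOTE: returns allSize when the page runs past the end, but start + limit - 1 otherwise;
    // check whether callers expect an inclusive or an exclusive end index.
    public int getEndPageSize(Integer start, Integer limit, Integer allSize) {
        return (start + limit) > allSize ? allSize : start + limit - 1;
    }

    /**
     * Longitude/latitude validation (non-negative values, a decimal point is required,
     * at most 6 decimal places).
     * Longitude: 0-179 followed by "." and up to 6 digits, or 180 followed by "." and up to 6 zeros.
     * Latitude:  0-89 followed by "." and up to 6 digits, or 90 followed by "." and up to 6 zeros.
     */
    public boolean checkItude(String longitude, String latitude) {
        String reglo = "((?:[0-9]|[1-9][0-9]|1[0-7][0-9])\\.([0-9]{0,6}))|((?:180)\\.([0]{0,6}))";
        String regla = "((?:[0-9]|[1-8][0-9])\\.([0-9]{0,6}))|((?:90)\\.([0]{0,6}))";
        if (longitude == null || latitude == null) {
            return false;
        }
        return longitude.trim().matches(reglo) && latitude.trim().matches(regla);
    }
}

This base class lives in the service layer. Spring injects its dependencies (DiscoveryClient, Registration, JedisCluster and so on, using reflection under the hood). Every other service can extend it and reuse them instead of repeating the setup, which saves work.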
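For comparison, checkItude could also parse the numbers and compare ranges instead of using regular expressions. The sketch below is an alternative, not the project's code, and CoordinateCheck is a made-up name. It deliberately differs from the regexes: it accepts negative values (western and southern hemispheres) and integers without a decimal point, while still allowing at most 6 decimal places. Whether those cases should pass is an assumption to check against the project's requirements.

```java
import java.math.BigDecimal;

// Range-based alternative to checkItude (made-up class, not the project's code).
final class CoordinateCheck {
    private static final BigDecimal MAX_LONGITUDE = new BigDecimal("180");
    private static final BigDecimal MAX_LATITUDE = new BigDecimal("90");
    private static final int MAX_DECIMALS = 6;

    static boolean isValid(String longitude, String latitude) {
        return inRange(longitude, MAX_LONGITUDE) && inRange(latitude, MAX_LATITUDE);
    }

    // Accepts numbers in [-max, max] with at most 6 decimal places; rejects null or non-numeric input.
    private static boolean inRange(String text, BigDecimal max) {
        if (text == null) {
            return false;
        }
        BigDecimal value;
        try {
            value = new BigDecimal(text.trim());
        } catch (NumberFormatException e) {
            return false;
        }
        return value.scale() <= MAX_DECIMALS && value.abs().compareTo(max) <= 0;
    }

    public static void main(String[] args) {
        System.out.println(isValid("121.473701", "31.230416")); // true
        System.out.println(isValid("181", "30"));                // false
    }
}
```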

import com.yuyuka.billiards.service.cache.CacheKeyEnum;
import com.yuyuka.billiards.service.domain.CompetitionStatisticsBasics;
import com.yuyuka.billiards.service.service.AbstractbServiceProvider;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Component;

@Slf4j
@Component
public class CompetitionStatisticsServiceProvider extends AbstractbServiceProvider {

    /** Records the total number of matches for both players. */
    public Boolean addTotalNumbers(CompetitionStatisticsBasics basics) throws Exception {
        return incrementForBothPlayers(CacheKeyEnum.BILLIARDS_COMPETITION_TOTAL_NUMER, basics, "total matches");
    }

    /** Records the total number of frames for both players. */
    public Boolean addTotalNumber(CompetitionStatisticsBasics basics) throws Exception {
        return incrementForBothPlayers(CacheKeyEnum.BILLIARDS_COMPETITION_TOTAL_GROUPNUMBER, basics, "total frames");
    }

    /**
     * Records the number of wins.
     * NOTE: as written this increments the win counter of both players; presumably only the winner should count.
     */
    public Boolean addWinNumber(CompetitionStatisticsBasics basics) throws Exception {
        return incrementForBothPlayers(CacheKeyEnum.BILLIARDS_COMPETITION_TOTAL_WINNUMBER, basics, "wins");
    }

    /**
     * Records the number of losses.
     * NOTE: as written this increments the loss counter of both players; presumably only the loser should count.
     */
    public Boolean addFailNumber(CompetitionStatisticsBasics basics) throws Exception {
        return incrementForBothPlayers(CacheKeyEnum.BILLIARDS_COMPETITION_TOTAL_FAILNUMBVER, basics, "losses");
    }

    /**
     * Records the cumulative playing time.
     * NOTE: this adds 1 per call, so it counts matches rather than time; accumulating duration
     * would need the match length (e.g. derived from beginDate/endDate) as the increment.
     */
    public Boolean addTotalTime(CompetitionStatisticsBasics basics) throws Exception {
        return incrementForBothPlayers(CacheKeyEnum.BILLIARDS_COMPETITION_TOTAL_TIME, basics, "cumulative time");
    }

    /** Records the two opponents who played ("KO") each other. */
    public Boolean addKoUsers(CompetitionStatisticsBasics basics) throws Exception {
        return incrementForBothPlayers(CacheKeyEnum.BILLIARDS_COMPETITION_KO_USERS, basics, "KO opponents");
    }

    // Adds 1 to the given counter for both players. The two INCRBY calls are separate commands
    // (the keys usually live in different slots), so they are not atomic: if the second one fails,
    // the first increment has already been applied.
    private Boolean incrementForBothPlayers(CacheKeyEnum counter, CompetitionStatisticsBasics basics,
                                            String what) throws Exception {
        try {
            jedisCluster.incrBy(counter.createKey(basics.getUserId1().toString()), 1);
            jedisCluster.incrBy(counter.createKey(basics.getUserId2().toString()), 1);
        } catch (Exception e) {
            log.error("failed to record {}", what, e);
            throw new Exception("redis insert failed", e);
        }
        return true;
    }
}

The main thing to explain is the incrBy method. Its source in JedisCluster (Jedis) looks like this:

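@Override
public Long incrBy(final String key, final long integer) {
    // run(key) computes the key's slot, picks the connection for that slot and
    // retries on MOVED/ASK redirects, up to maxAttempts times.
    return new JedisClusterCommand<Long>(connectionHandler, maxAttempts) {
        @Override
        public Long execute(Jedis connection) {
            return connection.incrBy(key, integer);
        }
    }.run(key);
}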

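For comparison, here is an incrBy wrapper built on a ShardedJedis pool (client-side sharding, not Redis Cluster):

@Override
public long incrBy(String key, long incr) throws Exception {
    ShardedJedis shardedJedis = null;
    boolean broken = false;
    try {
        shardedJedis = shardedJedisPool.getResource();
        // No EXISTS/SET beforehand: INCRBY already treats a missing key as 0, and a separate
        // "SET key 0" could overwrite an increment made by another client in between.
        return shardedJedis.incrBy(key, incr);
    } catch (JedisException e) {
        broken = handleJedisException(e);
        throw e;
    } finally {
        closeResourse(shardedJedis, broken);
    }
}

The Redis INCRBY command adds the given increment to the number stored at key. If the key does not exist, its value is first set to 0 and then INCRBY is applied. If the stored value is not an integer, Redis returns an error.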
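To make those semantics concrete, here is a tiny in-memory model of INCRBY. IncrByModel is a made-up name; it is not a Redis client and it is not thread-safe. In the model, a missing key counts as 0, a non-integer value is an error, and the new value is returned. In real Redis each command runs atomically, so a single INCRBY is safe under concurrency. EXISTS followed by SET key 0 is two commands, though, so another client's increment can land between them and be overwritten.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory model of INCRBY semantics (made-up class; not a Redis client, not thread-safe).
final class IncrByModel {
    private final Map<String, String> store = new HashMap<>();

    void set(String key, String value) {
        store.put(key, value);
    }

    String get(String key) {
        return store.get(key);
    }

    long incrBy(String key, long increment) {
        String current = store.get(key);
        long value;
        try {
            // A missing key counts as 0, so no EXISTS/SET round trip is needed first.
            value = (current == null) ? 0L : Long.parseLong(current);
        } catch (NumberFormatException e) {
            // Real Redis answers "ERR value is not an integer or out of range" here.
            throw new IllegalStateException("value is not an integer or out of range", e);
        }
        // Real Redis also refuses an increment that would overflow a signed 64-bit integer.
        long result = Math.addExact(value, increment);
        // The value is kept as a string, which is the value type INCRBY works on in Redis.
        store.put(key, Long.toString(result));
        return result;
    }

    public static void main(String[] args) {
        IncrByModel redis = new IncrByModel();
        System.out.println(redis.incrBy("wins:42", 1)); // 1
        System.out.println(redis.incrBy("wins:42", 5)); // 6
    }
}
```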

Did you notice CompetitionStatisticsBasics and CacheKeyEnum? Their code is shown below.

import com.yuyuka.billiards.api.type.CompetitionTypeEnum;
import lombok.Data;

import java.math.BigDecimal;
import java.util.Date;

@Data
public class CompetitionStatisticsBasics implements java.io.Serializable {
    // Match type
    public CompetitionTypeEnum competitionTypeEnum;
    // Start time
    public Date beginDate;
    // End time
    public Date endDate;
    // Player 1
    public BigDecimal userId1;
    // Player 2
    public BigDecimal userId2;
    // Player 1's score
    public Integer userId1Point;
    // Player 2's score
    public Integer userid2Point;
}

This basic class just declares the fields. Lombok's @Data keeps it short: it generates the getters, setters, toString(), equals() and hashCode(), so you don't have to write them.
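Roughly speaking, for two of those fields @Data saves you from writing the following by hand. This is an approximation for illustration; PlayersPlain is a made-up class. Lombok's generated code differs in details; for example, it also adds a canEqual method.

```java
import java.math.BigDecimal;
import java.util.Objects;

// Hand-written approximation of what @Data generates for two fields (made-up class).
final class PlayersPlain {
    private BigDecimal userId1;
    private BigDecimal userId2;

    public BigDecimal getUserId1() { return userId1; }
    public void setUserId1(BigDecimal userId1) { this.userId1 = userId1; }
    public BigDecimal getUserId2() { return userId2; }
    public void setUserId2(BigDecimal userId2) { this.userId2 = userId2; }

    // Field-by-field equality, as @Data's equals does.
    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof PlayersPlain)) return false;
        PlayersPlain other = (PlayersPlain) o;
        return Objects.equals(userId1, other.userId1) && Objects.equals(userId2, other.userId2);
    }

    @Override
    public int hashCode() {
        return Objects.hash(userId1, userId2);
    }

    // Same shape as Lombok's toString: ClassName(field=value, ...).
    @Override
    public String toString() {
        return "PlayersPlain(userId1=" + userId1 + ", userId2=" + userId2 + ")";
    }

    public static void main(String[] args) {
        PlayersPlain p = new PlayersPlain();
        p.setUserId1(new BigDecimal("1"));
        p.setUserId2(new BigDecimal("2"));
        System.out.println(p); // PlayersPlain(userId1=1, userId2=2)
    }
}
```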

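// User match statistics
BILLIARDS_COMPETITION_TOTAL_NUMER("com:yuyuka:billiards:cache:competition:total:number", "user's total matches"),
BILLIARDS_COMPETITION_TOTAL_WINNUMBER("com:yuyuka:billiards:cache:competition:total:winnumber", "user's total wins"),
BILLIARDS_COMPETITION_TOTAL_GROUPNUMBER("com:yuyuka:billiards:cache:competition:total:groupynumber", "user's total frames"),
// NOTE: the next three prefixes are spelled "billiaeds"; correcting the spelling would change the stored keys.
BILLIARDS_COMPETITION_TOTAL_FAILNUMBVER("com:yuyuka:billiaeds:cache:competition:total:failnumber", "user's total losses"),
BILLIARDS_COMPETITION_TOTAL_TIME("com:yuyuka:billiaeds:cache:competition:total:time", "user's cumulative time"),
BILLIARDS_COMPETITION_KO_USERS("com:yuyuka:billiaeds:cache:competition:ko:users", "two users who played each other");

An enum defines the fixed set of values a variable can take. Here each constant also carries a Redis key prefix and a description.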
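The post doesn't show createKey or the rest of CacheKeyEnum. The following is therefore a hypothetical sketch of how such an enum could look, assuming keys are built as prefix:id. It also shows an optional variant that wraps the id in a hash tag, so that all counters of one user map to the same cluster slot.

```java
// Hypothetical cache-key enum in the style of CacheKeyEnum; the real createKey format is not shown in the post.
enum CacheKeySketch {
    COMPETITION_TOTAL_NUMBER("com:yuyuka:billiards:cache:competition:total:number", "user's total matches"),
    COMPETITION_TOTAL_WINNUMBER("com:yuyuka:billiards:cache:competition:total:winnumber", "user's total wins");

    private final String prefix;
    private final String description;

    CacheKeySketch(String prefix, String description) {
        this.prefix = prefix;
        this.description = description;
    }

    // Assumed format: prefix + ":" + id, e.g. "...:total:number:42".
    String createKey(String id) {
        return prefix + ":" + id;
    }

    // Hash-tag variant: only "42" in "...:{42}" is hashed, so all of a user's counters share a slot.
    String createTaggedKey(String id) {
        return prefix + ":{" + id + "}";
    }

    String description() {
        return description;
    }

    public static void main(String[] args) {
        for (CacheKeySketch k : values()) {
            System.out.println(k.createTaggedKey("42") + "  (" + k.description() + ")");
        }
    }
}
```

With createTaggedKey, only the id is hashed, so all of user 42's counters live on the same node. That makes multi-key operations on a single user possible, at the cost of concentrating each user's keys on one node.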

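Later I switched to Docker for Windows running on Hyper-V, and the approach above failed: creating the cluster timed out while connecting. I never managed to fix that, so I switched to a different yml file: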

version: '3.4'

x-image: &default-image publicisworldwide/redis-cluster

services:
  redis1:
    image: *default-image
    volumes:
      - /d/redis-cluster/7001/data:/data
    environment:
      - REDIS_PORT=7001
    ports:
      - "7001:7001"
    networks:
      hx_net:
        ipv4_address: 10.0.75.1
  redis2:
    image: *default-image
    volumes:
      - /d/redis-cluster/7002/data:/data
    environment:
      - REDIS_PORT=7002
    ports:
      - "7002:7002"
    networks:
      hx_net:
        ipv4_address: 10.0.75.2
  redis3:
    image: *default-image
    volumes:
      - /d/redis-cluster/7003/data:/data
    environment:
      - REDIS_PORT=7003
    ports:
      - "7003:7003"
    networks:
      hx_net:
        ipv4_address: 10.0.75.3
  redis4:
    image: *default-image
    volumes:
      - /d/redis-cluster/7004/data:/data
    environment:
      - REDIS_PORT=7004
    ports:
      - "7004:7004"
    networks:
      hx_net:
        ipv4_address: 10.0.75.4
  redis5:
    image: *default-image
    volumes:
      - /d/redis-cluster/7005/data:/data
    environment:
      - REDIS_PORT=7005
    ports:
      - "7005:7005"
    networks:
      hx_net:
        ipv4_address: 10.0.75.5
  redis6:
    image: *default-image
    volumes:
      - /d/redis-cluster/7006/data:/data
    environment:
      - REDIS_PORT=7006
    ports:
      - "7006:7006"
    networks:
      hx_net:
        ipv4_address: 10.0.75.6

networks:
  hx_net:
    driver: bridge
    ipam:
      config:
        - subnet: 10.0.75.0/16

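With 10.0.75.0/16 as the subnet, each container gets a fixed IP assigned in order (10.0.75.1 to 10.0.75.6), and each one uses its own port.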
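Every ipv4_address has to lie inside the network's subnet. A /16 prefix only compares the first two octets, so 10.0.75.0/16 actually covers 10.0.0.0 to 10.0.255.255; the .75 doesn't narrow it down. Here is a minimal Java check of that rule. It handles IPv4 only, and Cidr is a made-up name.

```java
// Minimal IPv4 CIDR membership check (class name is made up for this example).
final class Cidr {

    // Packs a dotted-quad IPv4 address into a 32-bit int.
    static int toInt(String ip) {
        String[] parts = ip.trim().split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not an IPv4 address: " + ip);
        }
        int value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) {
                throw new IllegalArgumentException("octet out of range in " + ip);
            }
            value = (value << 8) | octet;
        }
        return value;
    }

    // True if ip falls inside the block described by cidr, e.g. "10.0.75.0/16".
    static boolean contains(String cidr, String ip) {
        String[] parts = cidr.trim().split("/");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected address/prefix: " + cidr);
        }
        int prefix = Integer.parseInt(parts[1]);
        if (prefix < 0 || prefix > 32) {
            throw new IllegalArgumentException("prefix out of range: " + cidr);
        }
        // Java masks shift distances to 0..31, so -1 << 32 would stay -1; handle /0 separately.
        int mask = prefix == 0 ? 0 : -1 << (32 - prefix);
        return (toInt(parts[0]) & mask) == (toInt(ip) & mask);
    }

    public static void main(String[] args) {
        for (int i = 1; i <= 6; i++) {
            String ip = "10.0.75." + i;
            System.out.println(ip + " in 10.0.75.0/16: " + contains("10.0.75.0/16", ip));
        }
    }
}
```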

PS D:\redis-cluster> docker-compose up -d

Removing redis-cluster_redis1_1

Removing redis-cluster_redis3_1

Removing redis-cluster_redis4_1

Removing redis-cluster_redis5_1

Removing redis-cluster_redis6_1

Recreating redis-cluster_redis2_1 ... done

Recreating 266c649ecbcf_redis-cluster_redis4_1 ... done

Recreating 66d0f14d82ab_redis-cluster_redis1_1 ... done

Recreating 9483317793a7_redis-cluster_redis6_1 ... done

Recreating ce493defa180_redis-cluster_redis3_1 ... done

Recreating b5a7e8aa78fa_redis-cluster_redis5_1 ... done

PS D:\redis-cluster> docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1e9a21e2653e publicisworldwide/redis-cluster "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes 6379/tcp, 7000/tcp, 0.0.0.0:7002->7002/tcp redis-cluster_redis2_1

5955a961f19c publicisworldwide/redis-cluster "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes 6379/tcp, 7000/tcp, 0.0.0.0:7005->7005/tcp redis-cluster_redis5_1

3ef2e6ddf1ab publicisworldwide/redis-cluster "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes 6379/tcp, 7000/tcp, 0.0.0.0:7003->7003/tcp redis-cluster_redis3_1

15b746bdcde3 publicisworldwide/redis-cluster "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes 6379/tcp, 7000/tcp, 0.0.0.0:7001->7001/tcp redis-cluster_redis1_1

7d3f52b636a5 publicisworldwide/redis-cluster "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes 6379/tcp, 7000/tcp, 0.0.0.0:7006->7006/tcp redis-cluster_redis6_1

5c3b5b1930f0 publicisworldwide/redis-cluster "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes 6379/tcp, 7000/tcp, 0.0.0.0:7004->7004/tcp redis-cluster_redis4_1

368bb3fed26d mysql:5.7 "docker-entrypoint.s…" 26 hours ago Up 3 hours 0.0.0.0:3306->3306/tcp, 33060/tcp mysql

PS D:\redis-cluster> docker exec -it 1e9a21e2653e /bin/bash

root@1e9a21e2653e:/data#

root@1e9a21e2653e:/data# cd /usr/local/

root@1e9a21e2653e:/usr/local# ls

d bin etc games include lib man sbin share src

root@1e9a21e2653e:/usr/local# cd bin/

root@1e9a21e2653e:/usr/local/bin# ls

docker-entrypoint.sh entrypoint.sh gosu redis-benchmark redis-check-aof redis-check-rdb redis-cli redis-sentinel redis-server

root@1e9a21e2653e:/usr/local/bin# ./redis-cli -c -h 10.0.75.2 -p 7002

10.0.75.2:7002> info

# Server

redis_version:5.0.0

redis_git_sha1:00000000

redis_git_dirty:0

redis_build_id:9a5fa86bdce33ad2

redis_mode:cluster

os:Linux 4.9.125-linuxkit x86_64

arch_bits:64

multiplexing_api:epoll

atomicvar_api:atomic-builtin

gcc_version:6.3.0

process_id:1

run_id:00b2fb94ff2d4cc317b613ef229f8b2730922df5

tcp_port:7002

uptime_in_seconds:203

uptime_in_days:0

hz:10

configured_hz:10

lru_clock:12398754

executable:/data/redis-server

config_file:/usr/local/etc/redis.conf

# Clients

connected_clients:1

client_recent_max_input_buffer:4

client_recent_max_output_buffer:4100800

blocked_clients:0

# Memory

used_memory:1512072

used_memory_human:1.44M

used_memory_rss:4620288

used_memory_rss_human:4.41M

used_memory_peak:5611848

used_memory_peak_human:5.35M

used_memory_peak_perc:26.94%

used_memory_overhead:1498862

used_memory_startup:1449176

used_memory_dataset:13210

used_memory_dataset_perc:21.00%

allocator_allocated:1985072

allocator_active:2293760

allocator_resident:11800576

total_system_memory:5170438144

total_system_memory_human:4.82G

used_memory_lua:37888

used_memory_lua_human:37.00K

used_memory_scripts:0

used_memory_scripts_human:0B

number_of_cached_scripts:0

maxmemory:0

maxmemory_human:0B

maxmemory_policy:noeviction

allocator_frag_ratio:1.16

allocator_frag_bytes:308688

allocator_rss_ratio:5.14

allocator_rss_bytes:9506816

rss_overhead_ratio:0.39

rss_overhead_bytes:18446744073702371328

mem_fragmentation_ratio:3.14

mem_fragmentation_bytes:3150216

mem_not_counted_for_evict:0

mem_replication_backlog:0

mem_clients_slaves:0

mem_clients_normal:49686

mem_aof_buffer:0

mem_allocator:jemalloc-5.1.0

active_defrag_running:0

lazyfree_pending_objects:0

# Persistence

loading:0

rdb_changes_since_last_save:0

rdb_bgsave_in_progress:0

rdb_last_save_time:1572679639

rdb_last_bgsave_status:ok

rdb_last_bgsave_time_sec:-1

rdb_current_bgsave_time_sec:-1

rdb_last_cow_size:0

aof_enabled:1

aof_rewrite_in_progress:0

aof_rewrite_scheduled:0

aof_last_rewrite_time_sec:-1

aof_current_rewrite_time_sec:-1

aof_last_bgrewrite_status:ok

aof_last_write_status:ok

aof_last_cow_size:0

aof_current_size:0

aof_base_size:0

aof_pending_rewrite:0

aof_buffer_length:0

aof_rewrite_buffer_length:0

aof_pending_bio_fsync:0

aof_delayed_fsync:0

# Stats

total_connections_received:1

total_commands_processed:1

instantaneous_ops_per_sec:0

total_net_input_bytes:31

total_net_output_bytes:11468

instantaneous_input_kbps:0.00

instantaneous_output_kbps:6.93

rejected_connections:0

sync_full:0

sync_partial_ok:0

sync_partial_err:0

expired_keys:0

expired_stale_perc:0.00

expired_time_cap_reached_count:0

evicted_keys:0

keyspace_hits:0

keyspace_misses:0

pubsub_channels:0

pubsub_patterns:0

latest_fork_usec:0

migrate_cached_sockets:0

slave_expires_tracked_keys:0

active_defrag_hits:0

active_defrag_misses:0

active_defrag_key_hits:0

active_defrag_key_misses:0

# Replication

role:master

connected_slaves:0

master_replid:3f81ca3ebde99c384b3ef02e33597f8fd359e4ab

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:0

second_repl_offset:-1

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

# CPU

used_cpu_sys:0.330000

used_cpu_user:0.170000

used_cpu_sys_children:0.000000

used_cpu_user_children:0.000000

# Cluster

cluster_enabled:1

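The cluster_enabled:1 at the very bottom means the node is running in cluster mode (cluster-enabled yes in its config). It does not mean the cluster has been formed yet. The nodes only join each other and get slots assigned with redis-cli --cluster create, which is run next.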
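INFO returns plain key:value lines grouped under # Section headers, so checking a field programmatically is straightforward. Below is a sketch that turns the text into a map and reads cluster_enabled; InfoParser is a made-up name. The same parsing also works on the output of CLUSTER INFO. There, cluster_state:ok is the field that shows whether the cluster is actually formed and serving all slots.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Parses INFO (or CLUSTER INFO) text into field -> value pairs (class name is made up).
final class InfoParser {

    static Map<String, String> parse(String info) {
        Map<String, String> fields = new LinkedHashMap<>();
        // Redis separates lines with \r\n; accept plain \n as well.
        for (String rawLine : info.split("\r?\n")) {
            String line = rawLine.trim();
            // Skip blank lines and section headers such as "# Cluster".
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            int colon = line.indexOf(':');
            if (colon > 0) {
                fields.put(line.substring(0, colon), line.substring(colon + 1));
            }
        }
        return fields;
    }

    // cluster_enabled:1 only means the node was started with cluster mode on.
    static boolean clusterModeEnabled(String info) {
        return "1".equals(parse(info).get("cluster_enabled"));
    }

    public static void main(String[] args) {
        String sample = "# Server\r\nredis_version:5.0.0\r\nredis_mode:cluster\r\n\r\n# Cluster\r\ncluster_enabled:1\r\n";
        System.out.println(parse(sample));
        System.out.println(clusterModeEnabled(sample));
    }
}
```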

root@1e9a21e2653e:/usr/local/bin# redis-cli --cluster create 10.0.75.1:7001 10.0.75.2:7002 10.0.75.3:7003 10.0.75.4:7004 10.0.75.5:7005 10.0.75.6:7006 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 10.0.75.4:7004 to 10.0.75.1:7001

Adding replica 10.0.75.5:7005 to 10.0.75.2:7002

Adding replica 10.0.75.6:7006 to 10.0.75.3:7003

M: 6ab68467d34c533cdd2f79d637ac459f546119bd 10.0.75.1:7001
   slots:[0-5460] (5461 slots) master

M: 1c643cf17b2376e823139af7f13424b6c2bcd0e5 10.0.75.2:7002
   slots:[5461-10922] (5462 slots) master

M: 26ce42711b94ab277f4158c7356ff27478cf0d7c 10.0.75.3:7003
   slots:[10923-16383] (5461 slots) master

S: d63b62446074dbc95a4e34d921d17694686de71f 10.0.75.4:7004
   replicates 6ab68467d34c533cdd2f79d637ac459f546119bd

S: 7f1520ce7ca1c9afbfb7eee9c45a1bf3a3bf43a1 10.0.75.5:7005
   replicates 1c643cf17b2376e823139af7f13424b6c2bcd0e5

S: b65852d145d280dca98426c5af8b153979885c8a 10.0.75.6:7006
   replicates 26ce42711b94ab277f4158c7356ff27478cf0d7c

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

....

>>> Performing Cluster Check (using node 10.0.75.1:7001)

M: 6ab68467d34c533cdd2f79d637ac459f546119bd 10.0.75.1:7001
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

M: 26ce42711b94ab277f4158c7356ff27478cf0d7c 10.0.75.3:7003
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)

S: d63b62446074dbc95a4e34d921d17694686de71f 10.0.75.4:7004
   slots: (0 slots) slave
   replicates 6ab68467d34c533cdd2f79d637ac459f546119bd

S: b65852d145d280dca98426c5af8b153979885c8a 10.0.75.6:7006
   slots: (0 slots) slave
   replicates 26ce42711b94ab277f4158c7356ff27478cf0d7c

M: 1c643cf17b2376e823139af7f13424b6c2bcd0e5 10.0.75.2:7002
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

S: 7f1520ce7ca1c9afbfb7eee9c45a1bf3a3bf43a1 10.0.75.5:7005
   slots: (0 slots) slave
   replicates 1c643cf17b2376e823139af7f13424b6c2bcd0e5

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

root@1e9a21e2653e:/usr/local/bin#

And with that, the cluster is up and running. That took me a lot of effort!
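The slot ranges printed by redis-cli --cluster create above (0-5460, 5461-10922, 10923-16383) come from splitting the 16384 slots as evenly as possible across the three masters. The sketch below reproduces those ranges. It follows the same idea: each master gets a fractional share, the boundary is rounded, and the last master takes the remainder. It is not redis-cli's source code, and SlotAllocation is a made-up name.

```java
import java.util.ArrayList;
import java.util.List;

// Even split of the 16384 hash slots across N masters (class name is made up).
final class SlotAllocation {
    static final int SLOT_COUNT = 16384;

    // Returns one {firstSlot, lastSlot} pair per master, contiguous and covering every slot.
    static List<int[]> split(int masters) {
        if (masters <= 0 || masters > SLOT_COUNT) {
            throw new IllegalArgumentException("masters must be between 1 and " + SLOT_COUNT);
        }
        double perMaster = (double) SLOT_COUNT / masters;
        List<int[]> ranges = new ArrayList<>();
        int first = 0;
        double cursor = 0.0;
        for (int i = 0; i < masters; i++) {
            // Round the fractional boundary; the last master takes whatever is left.
            int last = (i == masters - 1)
                    ? SLOT_COUNT - 1
                    : (int) Math.round(cursor + perMaster - 1);
            ranges.add(new int[] {first, last});
            first = last + 1;
            cursor += perMaster;
        }
        return ranges;
    }

    public static void main(String[] args) {
        for (int[] r : split(3)) {
            System.out.println(r[0] + " - " + r[1] + " (" + (r[1] - r[0] + 1) + " slots)");
        }
        // 0 - 5460 (5461 slots)
        // 5461 - 10922 (5462 slots)
        // 10923 - 16383 (5461 slots)
    }
}
```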