做响应式网站字体需要响应么/seo模拟点击软件

文章目录

- 前言

- 一、数据介绍

- 1. kaggle比赛介绍

- 2. 数据集介绍

- 二、数据清洗

- 1. 数据集清洗

- 2. 数据可视化

- 三、模型训练和测试

- 结果

前言

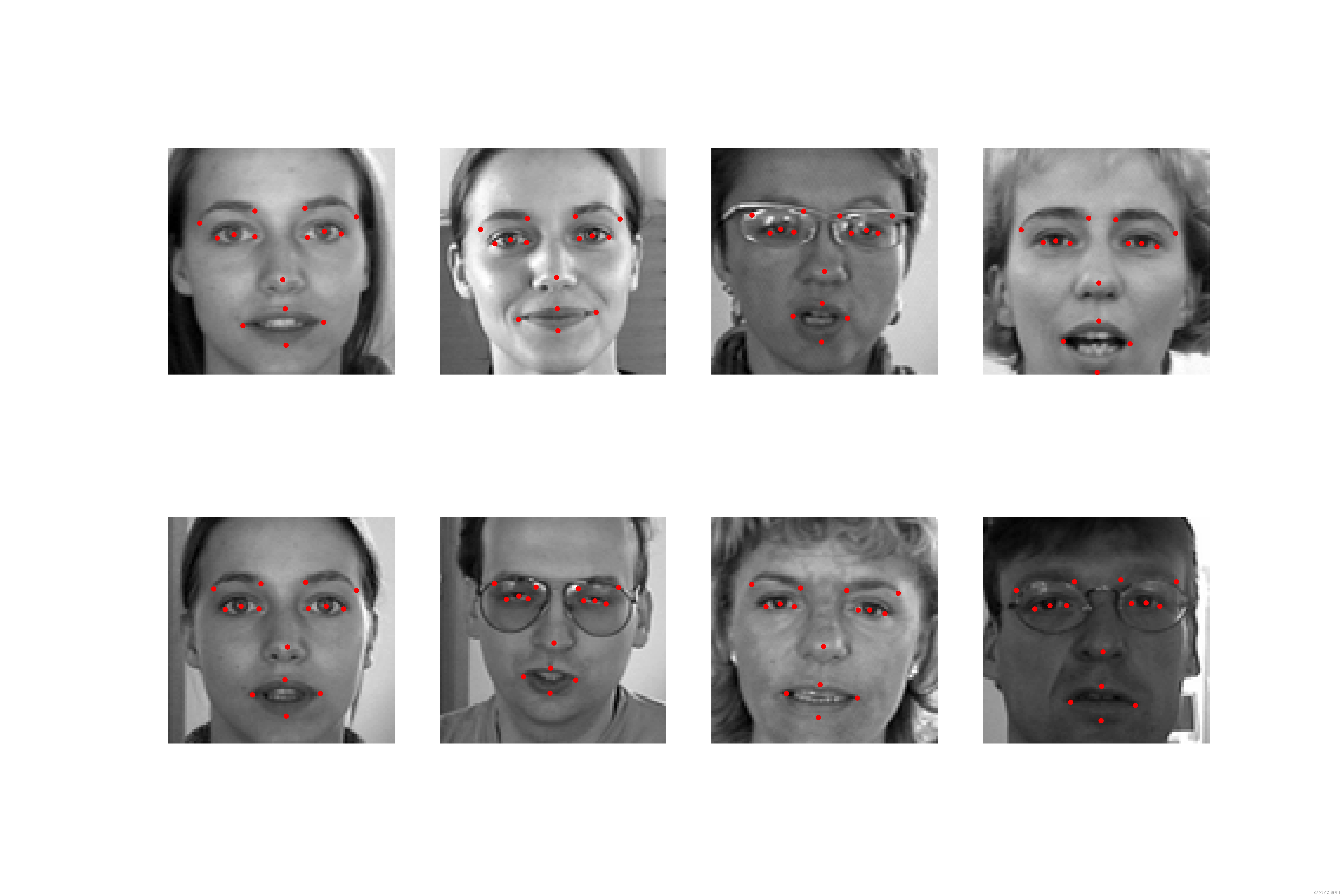

人脸关键点检测是人脸后续处理算法的基础。在本文,基于kaggle的关键点检测的挑战赛尝试了对人脸15个关键点进行检测。

一、数据介绍

1. kaggle比赛介绍

本文章的数据来自五年前kaggle比赛中检测面部关键点的比赛。当时比赛的目的希望通过人脸的关键点检测实现: 1. 实现对视频和图像中的人脸的追踪 2.分析面部表情 3. 检测畸形面部体征以进行医学诊断 4. 生物识别/人脸识别 。检测面部关键点是一个非常具有挑战性的问题。 面部特征因人而异,即使对于单个人,也会因 3D 姿势、大小、位置、视角和光照条件而存在大量差异。 计算机视觉研究在解决这些困难方面取得了长足的进步,但仍有许多改进的机会。

比赛链接:https://www.kaggle.com/c/facial-keypoints-detection

2. 数据集介绍

标注方式描述: 每个关键点都由像素空间中的(x,y)实值对指定。每个人脸对象对应15个关键点,分别代表人脸的如下元素:left_eye_center, right_eye_center, left_eye_inner_corner, left_eye_outer_corner, right_eye_inner_corner, right_eye_outer_corner, left_eyebrow_inner_end, left_eyebrow_outer_end, right_eyebrow_inner_end, right_eyebrow_outer_end, nose_tip, mouth_left_corner, mouth_right_corner, mouth_center_top_lip, mouth_center_bottom_lip。每张图片被保存为96*96的大小,用0~255的数值表示对应像素点的灰度值。

数据格式描述:

训练集: 一共包含7049张图片。每一行包含了对应的15个关键点坐标(x,y)和对应图片像素集合对应的行向量。

测试集: 一共包含1783张图片。每一行对应了图片的序号和像素集合

数据集链接:https://www.kaggle.com/c/facial-keypoints-detection/data

(温馨提示:下载前需要先注册kaggle账号)

由于测试集的标签稍微给出,在本文中仅对训练集进行划分。

二、数据清洗

1. 数据集清洗

在kaggle提供的训练集中有相当一部分的关键点是空缺的,所以需要先对数据中的无效数据进行清除。先读取提高的训练集,并保存为train_without_nan.csv

2. 数据可视化

使用该操作对训练集中的部分数据进行读取,并将其可视化出来。

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import matplotlib.pyplot as plt

train_dir = 'training.csv' # 读取训练图片数据集

train_data = pd.read_csv(train_dir)

print("Size of train data: " + str(len(train_data)) + '*' + str(len(train_data.columns))) #查看训练集尺寸

print(train_data.info()) # 查看训练集信息

train = train_data.dropna() # 删除缺失行

train.info()

train.to_csv('train_without_nan.csv') # 将删除了缺失项的序列进行保存

X=[]

Y=[]

for img in train['Image']: # 读取图片序列X.append(np.asarray(img.split(),dtype=float).reshape(96,96,1))

X=np.reshape(X,(-1,96,96,1))

X = np.asarray(X).astype('float32')

for i in range(len((train))): # 读取标签Y.append(np.asarray(train.iloc[i][0:30].to_numpy()))

Y = np.asarray(Y).astype('float32')

disp=8

fig,axes=plt.subplots(int((disp+3)/4),4,figsize=(15,10))

for i in range(disp):axes[int(i/4),i%4].axis('off')axes[int(i/4),i%4].imshow(X[i+8].reshape(96,96),cmap='gray')axes[int(i/4),i%4].scatter([train[train.columns[2*j]][i+8] for j in range(15)],[train[train.columns[2*j+1]][i+8] for j in range(15)],s=10,c='r')

plt.savefig('emotion_face_temp.jpg',dpi=500)

三、模型训练和测试

使用了warm up的学习率调整策略,使用resnet作为特征提取的主干网络,预先导入了选练权重,使用全连接层将1000个输出转换了30个坐标值的输出。

# -*-coding:utf-8-*-

import numpy as np

import torch

import pandas as pd

import matplotlib.pyplot as plt

import cv2

from math import sin, cos, pi

from keypoint_detect_network import FACERegNet

from dataset import Face_dataset,FacialKeypoiuntsTrainDataset,FacialKeypoiuntsTestDataset

from torch import nn, optim

from torch.utils.data import DataLoader,random_split,ConcatDataset

from torchvision.transforms import transforms

# from transform import RandomTranslation,RandomVerticalFlip,ToTensor

from transform import *

from predict import *

import math

import warnings

# from torchvision.models import resnet34,mobilenetv3

from model_v3 import mobilenet_v3_large,MobileNetV3,mobilenet_v3_small

warnings.filterwarnings('ignore')

from tqdm import tqdm#-----------------------------------------#

#对输入数据进行转换

#-----------------------------------------#

x_transforms = transforms.Compose([transforms.Resize((96, 96)), # 缩小对应到UNET的输入接口大小transforms.ToTensor(), # 转换成对应的tensor型数据# transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])transforms.Normalize((0.5,), (0.5,)) # 归一化操作,0.1307均值 0.3081标准差

])#----------------------------------------#

#学习率调整函数

#----------------------------------------#

def warmup_learning_rate(optimizer,iteration): # warmup函数在上升阶段的预热函数lr_ini = 0.0001for param_group in optimizer.param_groups:param_group['lr'] = lr_ini+(initial_lr - lr_ini)*iteration/100def cosin_deacy(optimizer,lr_base,current_epoch,warmup_epoch,global_epoch): # 余弦退火函数设计lr_new = 0.5 * lr_base*(1+np.cos(np.pi*(current_epoch - warmup_epoch)/np.float(global_epoch-warmup_epoch)))for param_group in optimizer.param_groups:param_group['lr'] = lr_newdef adjust_learning_rate(optimizer, gamma, step):"""Sets the learning rate to the initial LR decayed by 10 at everyspecified step# Adapted from PyTorch Imagenet example:# https://github.com/pytorch/examples/blob/master/imagenet/main.py"""lr = initial_lr * (gamma ** (step))for param_group in optimizer.param_groups:param_group['lr'] = lrdef warmup_learning_rate(optimizer,iteration):lr_ini = 0.000001for param_group in optimizer.param_groups:param_group['lr'] = lr_ini+(initial_lr - lr_ini)*iteration/100#-----------------------------------------#

#对数据进行训练

#-----------------------------------------#

def train(epoch_num,train_dataset):count = 0device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")# print("ok")criterion = nn.L1Loss()# initial_lr = 0.0001net = nn.Sequential(nn.Conv2d(1,3,3,padding =1),resnet50(pretrained=True),nn.Linear(1000,100),nn.Linear(100,30))# net = FACERegNet(1)net = torch.nn.DataParallel(net)net = net.to(device)optimizer = optim.Adam(net.parameters(), lr=initial_lr)for epoch in range(epoch_num): trainloader = DataLoader(train_dataset,batch_size=batch_size,shuffle=True, num_workers=num_workers)loss_sum = 0count = 0aver = 0for imgs, labels in tqdm(trainloader):imgs = imgs.to(device)labels = labels.to(device)shape = labels.shapeoptimizer.zero_grad()predict = net(imgs)outputs = predict.squeeze()outputs = outputs.reshape(shape)loss0 = criterion(outputs, labels)loss0.backward()optimizer.step()if epoch == warmup_epoch:lr_base = optimizer.state_dict()['param_groups'][0]['lr']if epoch >= warmup_epoch:cosin_deacy(optimizer, lr_base, epoch,warmup_epoch, global_epoch)loss_sum += loss0.item()count = count + 1aver = loss_sum / countprint("#------------------------------#")print("epoch:%d aver_loss:%.5f"%(epoch,aver))print("current learning_rate:%.9f"%optimizer.param_groups[0]["lr"])torch.save(net.state_dict(), "./weights/train_resnet50.pth")print("Finish Training...")def test(test_dataset,weight_path):# net = FACERegNet(1)net = nn.Sequential(nn.Conv2d(1,3,3,padding =1),mobilenet_v3_small(),nn.Linear(1000,100),nn.Linear(100,30))net = torch.nn.DataParallel(net)net = net.to(device)net.load_state_dict(torch.load(weight_path))count = 0MSE = []for img,pix in tqdm(test_dataset):temp_sum = 0img = img.unsqueeze(0)img_points = net(img)img_points = img_points.cpu().detach().numpy()img_points_flatten = img_points.reshape(-1)pix = pix.numpy()pix_flatten = pix.reshape(-1)for i in range(len(pix_flatten)):temp = (img_points_flatten[i] - pix_flatten[i])**2temp_sum = temp_sum + tempaver_temp = temp_sum / len(pix_flatten)rmse = math.sqrt(aver_temp)MSE.append(rmse)count = count + 1print('#----image_id:%d------MSE:%5f----------------------#'%(count+1,rmse))aver_MSE = np.mean(np.array(MSE))print("#aver_mse of %d images is: %5f-------------------------#"%(len(test_dataset),aver_MSE))if __name__ == '__main__':

#-----------------------------------------#

#读取数据

#-----------------------------------------#all_dataset = FacialKeypoiuntsTrainDataset('train_pure.csv',transform=transforms.Compose([# RandomVerticalFlip(0.5),# RandomTranslation((0.2, 0.2)),ToTensor(),]))torch.manual_seed(0)total_length = len(all_dataset)test_length = np.int(total_length/5) # 将训练集和测试集按1:4划分train_length = total_length-test_length train_dataset,test_dataset = random_split(dataset=all_dataset,lengths=[train_length,test_length])

#-----------------------------------------#

#初始权重设置

#-----------------------------------------#initial_lr = 0.001batch_size = 50global_epoch = 2000 warmup_epoch = 200num_workers = 16weight_path = "./weights/train_resnet50.pth"device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")train(global_epoch,train_dataset)test(test_dataset,weight_path)

结果

如果感觉有用,麻烦各位大佬点个赞,谢谢,best wishes!